Being accused of a crime and waiting to learn what happens next is one of the most difficult ordeals a person can go through.

Here’s a hypothetical: the hearing date is here, and the judge listens to your case patiently. They consider all the details of the incident, carefully assessing the risks involved. In the end, the decision goes in your favor and you’re granted bail.

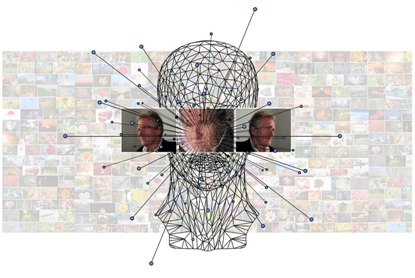

Now, here’s an alternative scenario. The court leaves it to a computer algorithm to estimate whether or not you’ll commit the same crime again. Against better judgment, the algorithm labels you high-risk because it is biased against a certain skin color or race, even if the bias is a subtle one.

Yes, computer algorithms can be racially biased. Here’s how:

Racial biases in the pretrial risk assessment

Replacing human judgment with computer algorithms to estimate risk is far from a fair system. According to one study, risk assessment tools assign higher scores to black defendants than to their white counterparts.

This is because crime prediction tools can use race and nationality as deciding factors, which gives a defendant’s racial origin an important role. The algorithm ties the defendant’s flight risk to that of all other defendants of the same race. So if you’ve been accused of a crime, the algorithm may declare you high-risk even if you pose no such threat, just because some other people of the same race did.
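To make the mechanism concrete, here is a minimal sketch of a score that depends only on group membership. Everything in it is an assumption for illustration: the records are invented toy data, the groups "A" and "B" are placeholders, and the function names are hypothetical. It is not the method of any real risk assessment tool.

```python
from collections import defaultdict

# Hypothetical toy records: (group, failed_to_appear). Invented, not real data.
records = [
    ("A", True), ("A", True), ("A", False), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]


def group_rates(rows):
    """Share of past defendants in each group who failed to appear."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for group, failed in rows:
        totals[group] += 1
        failures[group] += int(failed)
    return {group: failures[group] / totals[group] for group in totals}


def group_based_score(group, rates):
    """A score that looks only at the group, never at the individual."""
    return rates[group]


rates = group_rates(records)

# Two new defendants with identical personal histories, differing only by group.
score_a = group_based_score("A", rates)
score_b = group_based_score("B", rates)
print(score_a, score_b)
```

In this sketch the two defendants receive different scores even though nothing about them as individuals differs, which is the problem described above.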

In short, racial disparity is inevitable in this case. The risk score is either equally predictive for all races, or wrong for all of them. There is no in-between. It will never take into account your individual history, story, or opinion.

The way forward

Here is how the problem can be overcome:

Even if a pretrial risk assessment is implemented, judges should have the power to override its result when they see fit. The court administrator should provide the results of the algorithm along with detailed data and the reasoning that justifies them.

Although algorithms are said to be an improvement, work remains to be done. To improve their predictive accuracy, they should be used in conjunction with judicial discretion.

The risk score should take into account the defendant’s past criminal history and other factors that are personal to them. These should then be compared against the criminal records of the state as a whole, without regard to race.
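One way to picture such a score is the sketch below. It is only an illustration under stated assumptions: the statewide records are invented, the single personal factor used (number of prior offenses) is a stand-in for whatever factors a court would actually choose, and the function name `individual_score` is hypothetical.

```python
# Hypothetical statewide records; every value is invented for illustration.
STATE_RECORDS = [
    {"prior_offenses": 0, "race": "A", "failed_to_appear": False},
    {"prior_offenses": 0, "race": "B", "failed_to_appear": False},
    {"prior_offenses": 0, "race": "A", "failed_to_appear": True},
    {"prior_offenses": 0, "race": "B", "failed_to_appear": False},
    {"prior_offenses": 2, "race": "A", "failed_to_appear": True},
    {"prior_offenses": 2, "race": "B", "failed_to_appear": True},
    {"prior_offenses": 2, "race": "A", "failed_to_appear": False},
    {"prior_offenses": 2, "race": "B", "failed_to_appear": False},
]


def individual_score(prior_offenses, records):
    """Failure-to-appear rate among all statewide records with the same
    number of prior offenses. The "race" field is never read."""
    matches = [r for r in records if r["prior_offenses"] == prior_offenses]
    if not matches:
        return None  # no comparable records, so no score is produced
    failures = sum(1 for r in matches if r["failed_to_appear"])
    return failures / len(matches)


# Two defendants with the same history get the same score, whatever their race.
first_offender = individual_score(0, STATE_RECORDS)
repeat_offender = individual_score(2, STATE_RECORDS)
print(first_offender, repeat_offender)
```

Because the function takes only the defendant’s own history as input, two people with the same record are scored identically, and a judge can still override the number.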

A better way to handle bail is to use commercial bonds. They allow everyone to post bail, irrespective of their background. In the eyes of DeLaughter Bail Bonds, all defendants are equal. Get in touch with us to learn more about our bail bond services in Indiana.